NEWS

Artificial Intelligence (AI) is at the center of “moral panics,” according to Assistant Professor Admire Mare. This technological advancement harnesses the power of computers and machines to mimic the problem-solving and decision-making capabilities of the human mind.

When discussing AI, we refer to technical systems and robots designed to perform tasks traditionally done by humans. These systems can produce content, analyze stories, mine data, transcribe, and even communicate with audiences through chatbots. The current focus of AI evolution lies in generative AI, which encompasses large language models capable of generating new content, such as text, images, and music, based on patterns learned from existing data.

During the 9th edition of the Hub Unconference at Shoko Festival in September 2023, journalists, human rights defenders, and civic actors expressed concerns about the rapid advancement of AI. They feared AI would take over their jobs, making humans obsolete as machines become increasingly capable and efficient.

“I thought that my job was going to be threatened because AI can write articles, AI can write reports, AI can generate pictures and videos,” said broadcast and print journalist Lynette Manzini.

Addressing these concerns, Mare, a guest speaker on Journalism in the Age of AI, offered a different perspective. He pointed out that throughout history, new technologies have often triggered what he calls “moral panics.” Mare believes that the current moral panic surrounding AI is rooted in fear and misunderstanding. Rather than succumbing to it, he argues, we should embrace the technology and find ways to moderate and regulate its use. By using AI responsibly and ethically, we can harness its potential to help us achieve our goals.

But What Exactly is a Moral Panic?

A moral panic is a widespread fear, often resting on an exaggerated perception, of a threat to society’s values, interests, and safety. In the context of AI, the anxiety extends beyond job replacement to AI’s impact on governance, democracy, human rights, and journalism. These concerns arise from the realization that AI systems can generate inaccurate or fictitious content and, because they rely on training data, are susceptible to manipulation.

During a panel discussion about how AI can fight fake news, Peter Kimani of Code for Africa proposed a gateway solution to these concerns. Kimani emphasized the need for AI accountability, calling for watermarks on AI-generated work to promote transparency. Watermarks would allow users to identify AI-generated content, making it easier to distinguish human-generated from machine-generated content. He proposed that any software company operating in this space should attach an identifier indicating that AI has generated the content.

Other solutions that advocate for coexistence with AI include initiatives like CivicSignal, developed by Code for Africa. CivicSignal utilizes AI-driven language processing tools to analyze the underlying narratives that shape public discourse and perceptions, safeguarding against disinformation and extremism.

Kimani also mentioned the ChatGPT AI classifier, introduced earlier this year in an attempt to stop academics from presenting AI-generated work as their own. The tool’s accuracy is contested, highlighting the need for ongoing improvement.

Artificial Intelligence applications and capabilities are rapidly evolving. Platforms such as Google Assistant, Siri, and Grammarly demonstrate the potential of AI in various contexts. AI’s role in governance, democracy, journalism, and human rights is expanding, necessitating careful consideration of how to effectively and accountably incorporate AI into these areas of operation.

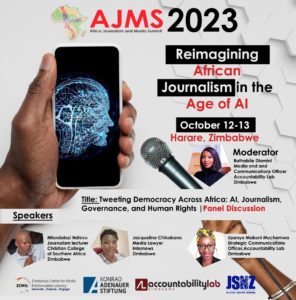

To further explore the impact of AI and advocate for responsible use, Accountability Lab Zimbabwe (ALZ) will lead a panel discussion titled “Tweeting Democracy Across Africa: AI, Journalism, Governance, and Human Rights” during the African Journalism and Media Summit (AJMS) on October 13, 2023, from 13:00 to 14:00 Central Africa Time at Crowne Plaza in Harare. This discussion, which you can join virtually, aims to provide insights and strategies for integrating AI into these domains effectively while upholding ethical practices and minimizing potential risks.

The discussion will delve into topics such as the role of AI in journalism, its implications for governance and democracy, and its impact on human rights. This event seeks to encourage responsible and accountable use of AI in our constantly evolving technological landscape by facilitating conversations and promoting understanding.

—

Bathabile Dlamini is the Media and Communication Officer at Accountability Lab Zimbabwe